#AIRFLOW API SERIES#

Ensure your DAGs don’t drag

Airflow represents each workflow as a series of tasks collected into a DAG. DAGs define the relationships and dependencies between tasks. An Airflow scheduler monitors your DAGs and initiates them based on their schedule. The scheduler then attempts to execute every task within an instantiated DAG (referred to as a DAG Run) in the appropriate order based on each task’s dependencies. Ideally, the scheduler will execute tasks on time and without delay. The higher the latency of your DAG Runs, the more likely subsequent DAG Runs will start before previous ones have finished executing. An increasing number of concurrent DAG Runs may lead Airflow to reach the max_active_runs limit. At that point it stops scheduling new DAG Runs, which can cause currently scheduled workflows to time out.

You can use the .avg metric to monitor the average time it takes to complete a task. This helps you determine whether your DAG Runs are lagging or close to timing out. To add context to incoming duration metrics, Datadog’s DogStatsD Mapper feature tags your DAG duration metrics with task_id and dag_id, so you can surface particularly slow tasks and DAGs.

For further insight into workflow performance, you can also track metrics from the Airflow scheduler. For instance, the metric _delay reports the time between when a DAG Run is supposed to start and when it actually starts. Delayed DAG Runs can slow down your workflows and may cause you to miss service level agreements (SLAs). If you notice unusually long delays, the Airflow documentation recommends improving scheduler latency by increasing the max_threads and scheduler_heartbeat_sec scheduler settings during configuration.

Keep tabs on queued tasks

Before an Airflow task completes successfully, it goes through a series of stages including scheduled, queued, and running. After tasks have been scheduled and added to a queue, they remain idle until an Airflow worker runs them. Large and complex workflows risk reaching the limit of Airflow’s concurrency parameter, which dictates how many tasks Airflow can run at once. Once that limit is reached, your queue can balloon with backed-up tasks and slow down workflow execution. With Datadog, you can create an alert that notifies you when the number of tasks running in a DAG is about to surpass your concurrency limit.

Dig deeper with Datadog APM

Airflow can rely on the background job manager Celery (through its CeleryExecutor) to distribute tasks across multi-node clusters. Datadog APM supports the Celery library, so you can easily trace your tasks. This gives you visibility into the performance of your distributed workflows, for example with flame graphs that trace tasks executed by Celery workers as they propagate across your infrastructure. Together, our Airflow and Celery integrations can help you gain a complete picture of your workflow performance as you monitor Airflow metrics and distributed traces. For instance, they can surface where your DAG Runs are experiencing latency.
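The scheduler executes each task in a DAG Run "in the appropriate order based on each task’s dependencies." That ordering is a topological sort of the task graph. The sketch below uses Kahn's algorithm on a small, hypothetical DAG whose task names are made up for illustration. Airflow's real scheduler also handles task states, trigger rules, and parallel execution, so treat this only as an illustration of dependency ordering, not as Airflow's implementation.

```javascript
// Hypothetical task graph: each key is a task_id and each value lists the
// task_ids it depends on (its upstream tasks).
function executionOrder(deps) {
  const indegree = new Map();   // number of unfinished upstream tasks
  const downstream = new Map(); // task -> tasks that wait on it
  const ensure = (task) => {
    if (!indegree.has(task)) indegree.set(task, 0);
    if (!downstream.has(task)) downstream.set(task, []);
  };
  for (const [task, upstreams] of Object.entries(deps)) {
    ensure(task);
    for (const up of upstreams) {
      ensure(up);
      downstream.get(up).push(task);
      indegree.set(task, indegree.get(task) + 1);
    }
  }
  // Kahn's algorithm: repeatedly take a task whose upstreams are all done.
  const ready = [...indegree.keys()].filter((t) => indegree.get(t) === 0);
  const order = [];
  while (ready.length > 0) {
    const task = ready.shift();
    order.push(task);
    for (const next of downstream.get(task)) {
      indegree.set(next, indegree.get(next) - 1);
      if (indegree.get(next) === 0) ready.push(next);
    }
  }
  // Tasks left over can only be part of a cycle, which a DAG cannot have.
  if (order.length !== indegree.size) {
    throw new Error('cycle detected: not a DAG');
  }
  return order;
}

const dag = {
  extract: [],
  transform: ['extract'],
  validate: ['extract'],
  load: ['transform', 'validate'],
};
console.log(executionOrder(dag));
```

Here `transform` and `validate` both depend only on `extract`, so either could run first or both could run in parallel. `load` can only start once both have finished.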
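The link between DAG Run latency, overlapping runs, and the max_active_runs limit can be made concrete with a toy simulation. It rests on simplifying assumptions of my own: every run takes the same fixed time, a run is due every `intervalMin` minutes, and a due run starts only while fewer than `maxActiveRuns` runs are active. The recorded start-minus-due gap is the same kind of quantity that a schedule-delay metric reports. `simulateRuns` and its parameters are hypothetical and do not model Airflow's actual scheduler (catchup, backfills, and so on).

```javascript
// Toy model: fixed-duration runs, due every intervalMin minutes, capped by
// a max_active_runs-style limit. Time advances in one-minute steps.
function simulateRuns(intervalMin, durationMin, maxActiveRuns, horizonMin) {
  const pending = [];    // due times of runs that have not started yet
  const activeEnds = []; // end times of runs that are currently running
  const delays = [];     // (start time - due time) for each started run
  for (let t = 0; t < horizonMin; t++) {
    // Drop runs that have finished by minute t.
    for (let i = activeEnds.length - 1; i >= 0; i--) {
      if (activeEnds[i] <= t) activeEnds.splice(i, 1);
    }
    // A new run becomes due on every interval boundary.
    if (t % intervalMin === 0) pending.push(t);
    // Start due runs only while the active-run cap allows it.
    while (pending.length > 0 && activeEnds.length < maxActiveRuns) {
      const due = pending.shift();
      activeEnds.push(t + durationMin);
      delays.push(t - due);
    }
  }
  return {
    started: delays.length,
    stillPending: pending.length,
    maxDelay: Math.max(0, ...delays),
  };
}

// Runs shorter than the interval never overlap; longer runs pile up.
console.log(simulateRuns(60, 50, 2, 600));
console.log(simulateRuns(60, 150, 2, 600));
```

In the second call, each run lasts longer than the schedule interval. The cap is therefore reached quickly, later runs start progressively later, and some are still waiting when the simulation ends.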
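Tagging duration metrics with dag_id and task_id is what lets you average durations per task and rank the slow ones. This sketch mimics that idea; it is not Datadog's implementation. It assumes raw StatsD names of the form `airflow.dag.<dag_id>.<task_id>.duration` in milliseconds, with no dots inside the IDs. It rewrites them to a single generic name plus tags, then averages per task. The target name `airflow.dag.task.duration`, the helper names, and the sample values are illustrative assumptions.

```javascript
// Assumed raw name pattern: airflow.dag.<dag_id>.<task_id>.duration
const PATTERN = /^airflow\.dag\.([^.]+)\.([^.]+)\.duration$/;

// Rewrite a raw metric name into one generic name plus dag_id/task_id tags,
// in the spirit of a DogStatsD mapper profile. Returns null if no match.
function mapMetric(rawName) {
  const m = PATTERN.exec(rawName);
  if (!m) return null;
  return { name: 'airflow.dag.task.duration', tags: { dag_id: m[1], task_id: m[2] } };
}

// Average duration per (dag_id, task_id), slowest first.
function slowestTasks(samples) {
  const totals = new Map();
  for (const { metric, value } of samples) {
    const mapped = mapMetric(metric);
    if (!mapped) continue; // ignore metrics that are not task durations
    const key = `${mapped.tags.dag_id}/${mapped.tags.task_id}`;
    const t = totals.get(key) || { sum: 0, count: 0 };
    t.sum += value;
    t.count += 1;
    totals.set(key, t);
  }
  return [...totals.entries()]
    .map(([key, t]) => ({ key, avgMs: t.sum / t.count }))
    .sort((a, b) => b.avgMs - a.avgMs);
}

// Made-up sample points for illustration.
const samples = [
  { metric: 'airflow.dag.etl.extract.duration', value: 1200 },
  { metric: 'airflow.dag.etl.extract.duration', value: 1800 },
  { metric: 'airflow.dag.etl.load.duration', value: 9000 },
  { metric: 'airflow.dag.report.render.duration', value: 400 },
];
console.log(slowestTasks(samples));
```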
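The queueing behavior behind the concurrency alert can be sketched with a simplified model, assuming a DAG may run at most `concurrency` tasks at once and every other ready task waits in the queue. The alert rule fires once running tasks reach a fraction of the limit. The 0.9 threshold, the limit of 16, and the helper names `taskSlots` and `shouldAlert` are arbitrary example values, not Datadog or Airflow settings.

```javascript
// Simplified model: at most `concurrency` tasks run; the rest are queued.
function taskSlots(readyTasks, concurrency) {
  const running = Math.min(readyTasks, concurrency);
  return { running, queued: readyTasks - running };
}

// Hypothetical alert rule: warn when running tasks reach `threshold`
// (a fraction) of the concurrency limit, before the queue starts to grow.
function shouldAlert(running, concurrency, threshold = 0.9) {
  return running / concurrency >= threshold;
}

for (const ready of [5, 15, 40]) {
  const { running, queued } = taskSlots(ready, 16);
  console.log(`ready=${ready} running=${running} queued=${queued} alert=${shouldAlert(running, 16)}`);
}
```

With 15 ready tasks nothing is queued yet, but the alert already fires. That early warning is the point of alerting before the limit is reached rather than after.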

#AIRFLOW API HOW TO#

The full Airflow DAG itself I won’t post, but in the excerpt below I show how to use the filename in the DAG.As soon as you enable our Airflow integration, you will see key metrics like DAG duration and task status populating an out-of-the-box dashboard, so you can get immediate insight into your Airflow-managed workloads. The nice thing here is that I’m actually passing the filename of the new file to Airflow, which I can use in the DAG lateron. You need to adjust the AIRFLOW_URL, DAG_NAME, AIRFLOW_USER, and AIRFLOW_PASSWORD. Var request = require ( 'request' ) module.

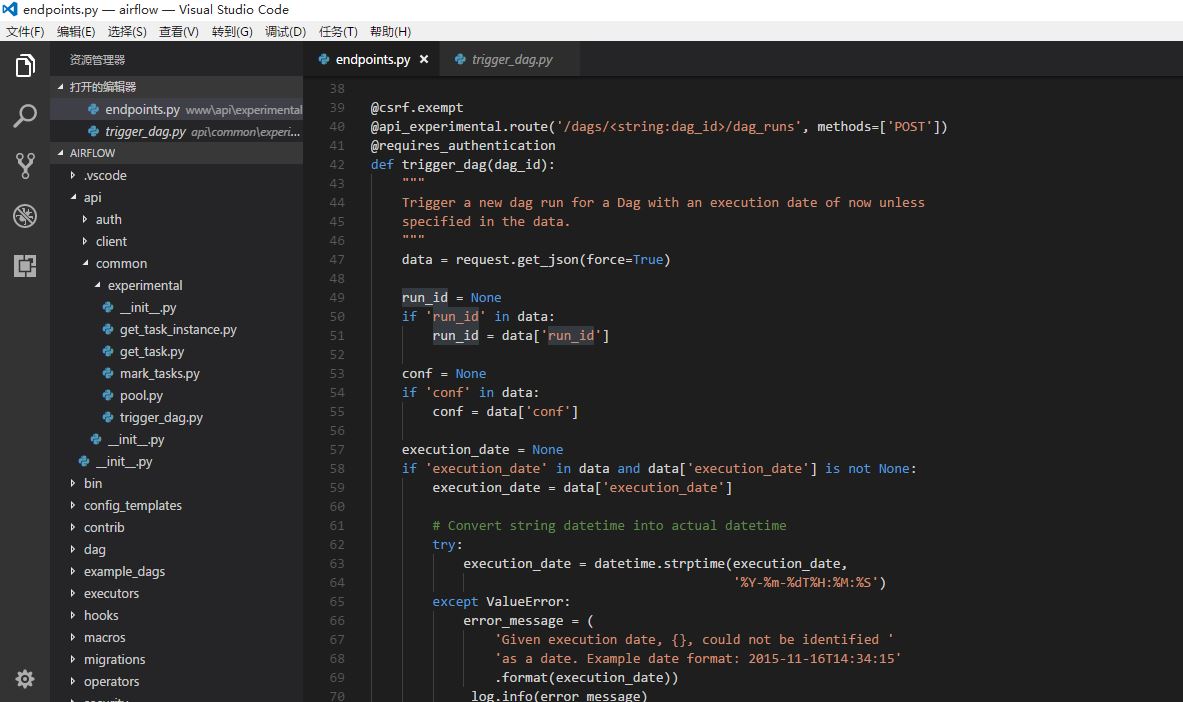

Something you should only use over HTTPS.Įnabling the password_auth backend is a small change to your Airflow config file: There are multiple options available, in this blogpost we use the password_auth backend which implements HTTP Basic Authentication. In this blog post we will use it to trigger a DAG.īy default the experimental API is unsecured, and hence before we continue we should define an auth_backend which secures it. The experimental API allows you to fetch information regarding dags and tasks, but also trigger and even delete a DAG. In this blog post, I will show how we use Azure Functions to trigger a DAG when a file is uploaded to a Azure Blob Store. This comes in handy if you are integrating with cloud storage such Azure Blob store.īecause although Airflow has the concept of Sensors, an external trigger will allow you to avoid polling for a file to appear. The Airflow experimental api allows you to trigger a DAG over HTTP.
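On the Azure Function side, here is a minimal sketch of the request such a function could send. It assumes Node.js, HTTP Basic Authentication via password_auth, and Airflow's experimental endpoint `POST /api/experimental/dags/<DAG_ID>/dag_runs`. The helper `buildTriggerRequest` and all example values (URL, DAG name, credentials, filename) are hypothetical stand-ins for AIRFLOW_URL, DAG_NAME, AIRFLOW_USER, AIRFLOW_PASSWORD, and the uploaded blob's name. This is not the function from this post. It only assembles the request, so it runs without a live Airflow instance.

```javascript
// Hypothetical helper: builds (but does not send) the HTTP request that
// asks Airflow's experimental API to create a new DAG run.
function buildTriggerRequest(airflowUrl, dagName, user, password, filename) {
  // HTTP Basic Authentication header value: base64("user:password").
  const token = Buffer.from(`${user}:${password}`).toString('base64');
  const base = airflowUrl.replace(/\/+$/, ''); // drop trailing slashes
  return {
    method: 'POST',
    url: `${base}/api/experimental/dags/${encodeURIComponent(dagName)}/dag_runs`,
    headers: {
      'Content-Type': 'application/json',
      Authorization: `Basic ${token}`,
    },
    // conf is sent as a JSON-encoded string (assumption: the DAG looks for
    // a "filename" key in its run configuration).
    body: JSON.stringify({ conf: JSON.stringify({ filename }) }),
  };
}

// Placeholder values only; substitute your own settings.
const req = buildTriggerRequest(
  'https://airflow.example.com/',
  'process_blob',
  'airflow_user',
  'secret',
  'uploads/data.csv'
);
console.log(req.method, req.url);
```

The returned object uses the option names (`method`, `url`, `headers`, `body`) that the `request` module accepts, so it could be passed as `request(options, callback)`. Inside the DAG, the value then shows up in the run's `dag_run.conf`.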
